
Long-Nhat Ho

Hi, I'm Long-Nhat (or you can call me Nhat).


Research


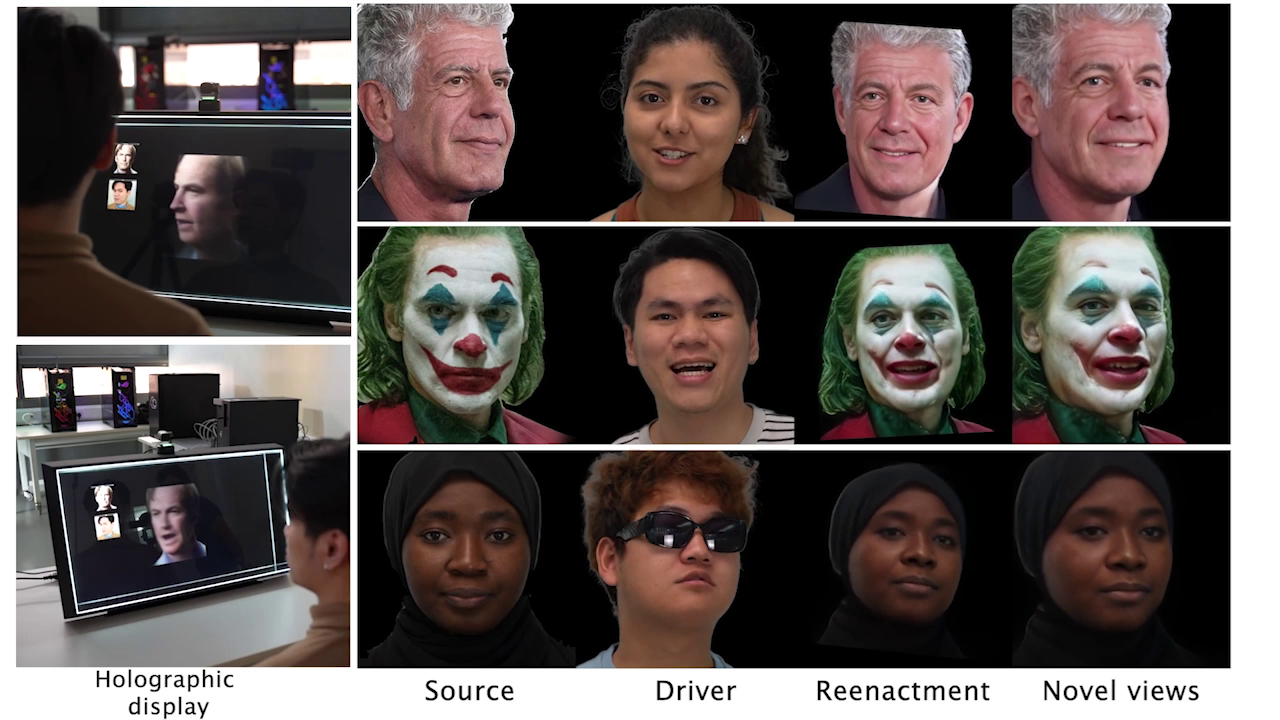

VOODOO XP: Expressive One-Shot Head Reenactment for VR Telepresence

Phong Tran, Egor Zakharov, Long-Nhat Ho, Liwen Hu, Adilbek Karmanov, Aviral Agarwal, McLean Goldwhite, Ariana Bermudez Venegas, Anh Tran, Hao Li, SIGGRAPH Asia, 2024

arXiv

A 3D-aware one-shot head reenactment method that generates expressive facial animations from any input video and a single 2D portrait, with no calibration or fine-tuning required. Our real-time solution preserves identity and stays consistent across views, and we demonstrate it on monocular video and in VR telepresence systems.


VOODOO VR: One-Shot Neural Avatars for Virtual Reality

Phong Tran, Egor Zakharov, Long-Nhat Ho, Adilbek Karmanov, Maksat Kengeskanov, McLean Goldwhite, Aviral Agarwal, Ariana Bermudez Venegas, Anh Tran, Otmar Hilliges, Liwen Hu, Hao Li, SIGGRAPH Real-time Live!, 2024

project page / video / arXiv

A two-way immersive telepresence solution built on state-of-the-art one-shot facial reenactment technology.


VOODOO 3D: Volumetric Portrait Disentanglement for One-Shot 3D Head Reenactment

Phong Tran, Egor Zakharov, Long-Nhat Ho, Anh Tran, Liwen Hu, Hao Li, CVPR, 2024

project page / video / arXiv

A high-fidelity, 3D-aware one-shot head reenactment technique. Our method transfers the expression of a driver to a source portrait and produces view-consistent renderings for holographic displays.


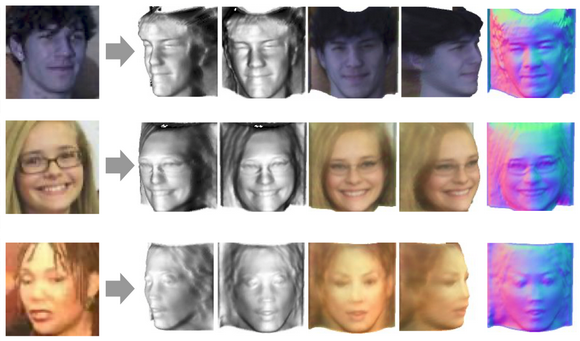

Toward Realistic Single-View 3D Object Reconstruction with Unsupervised Learning from Multiple Images

Long-Nhat Ho, Anh Tran, Quynh Phung, Minh Hoai, ICCV, 2021

project page / arXiv

Single-view 3D object reconstruction, learned without supervision from multiple images.


Design and source code from Jon Barron's website